Inside Inside is an interactive installation remixing video games and cinema. In between, a neural network creates associations from its artificial understanding of the two, generating a film in real-time from gameplay using images from the history of cinema.

- Installation: Inside Inside

- Concept, Design, Development: Douglas Edric Stanley

- Technology: C++, OpenCV, Raspberry Pi 4b, Playstation 4

- Exhibition: Le champ du signe

- Dates: May 2021

- Demo: https://vimeo.com/589844238

Installation

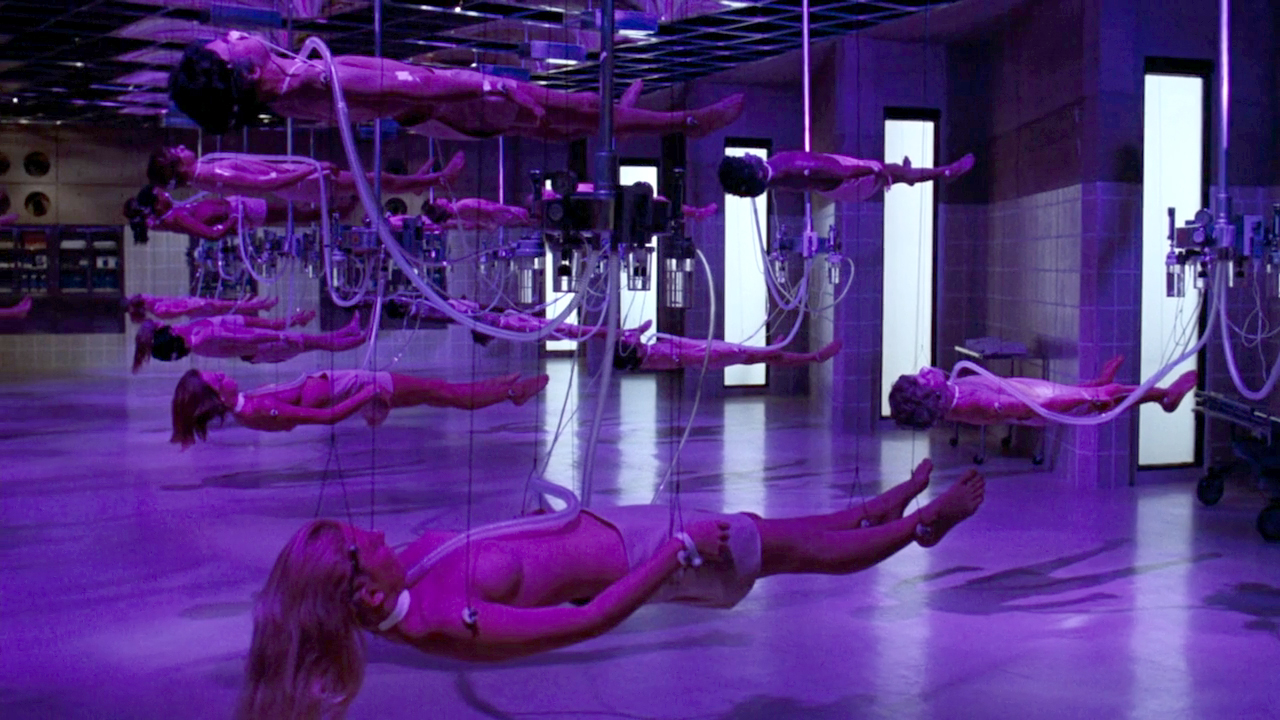

Inside Inside is the first in a series of interactive installations combining video games and cinema, as filtered through neural networks. In this first iteration of the system, players control a central character, a small boy, as he tries to survive within the dystopia of the popular video game “Inside”. In parallel, a neural network analyses the images of their gameplay in real time, attempts to look “inside” the images emerging from their Playstation, and finds matching imagery in a curated list of eerily relevant science fiction and horror dystopias from film and television.

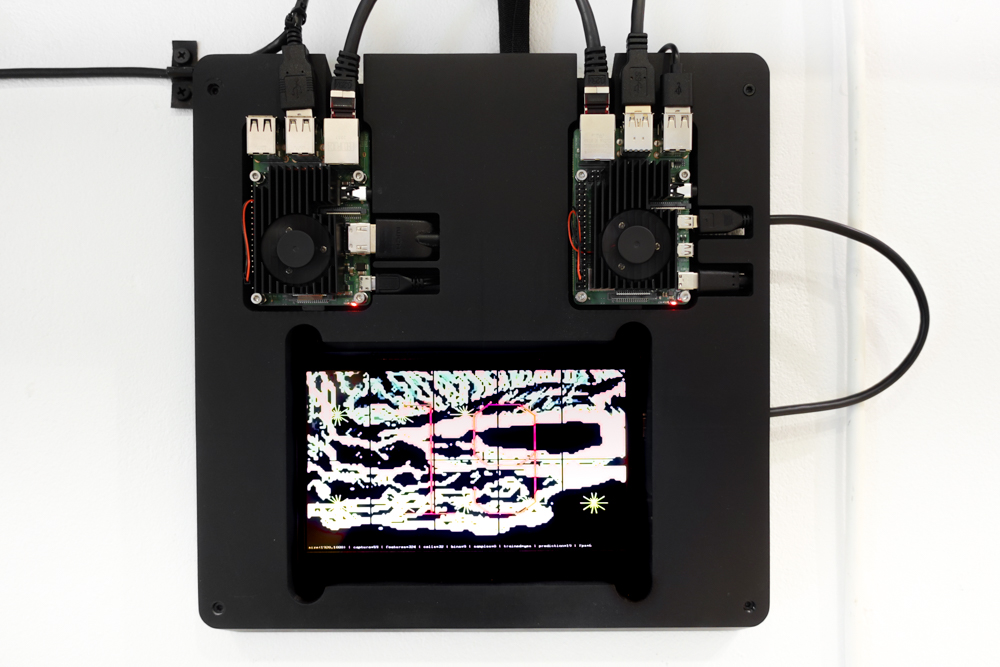

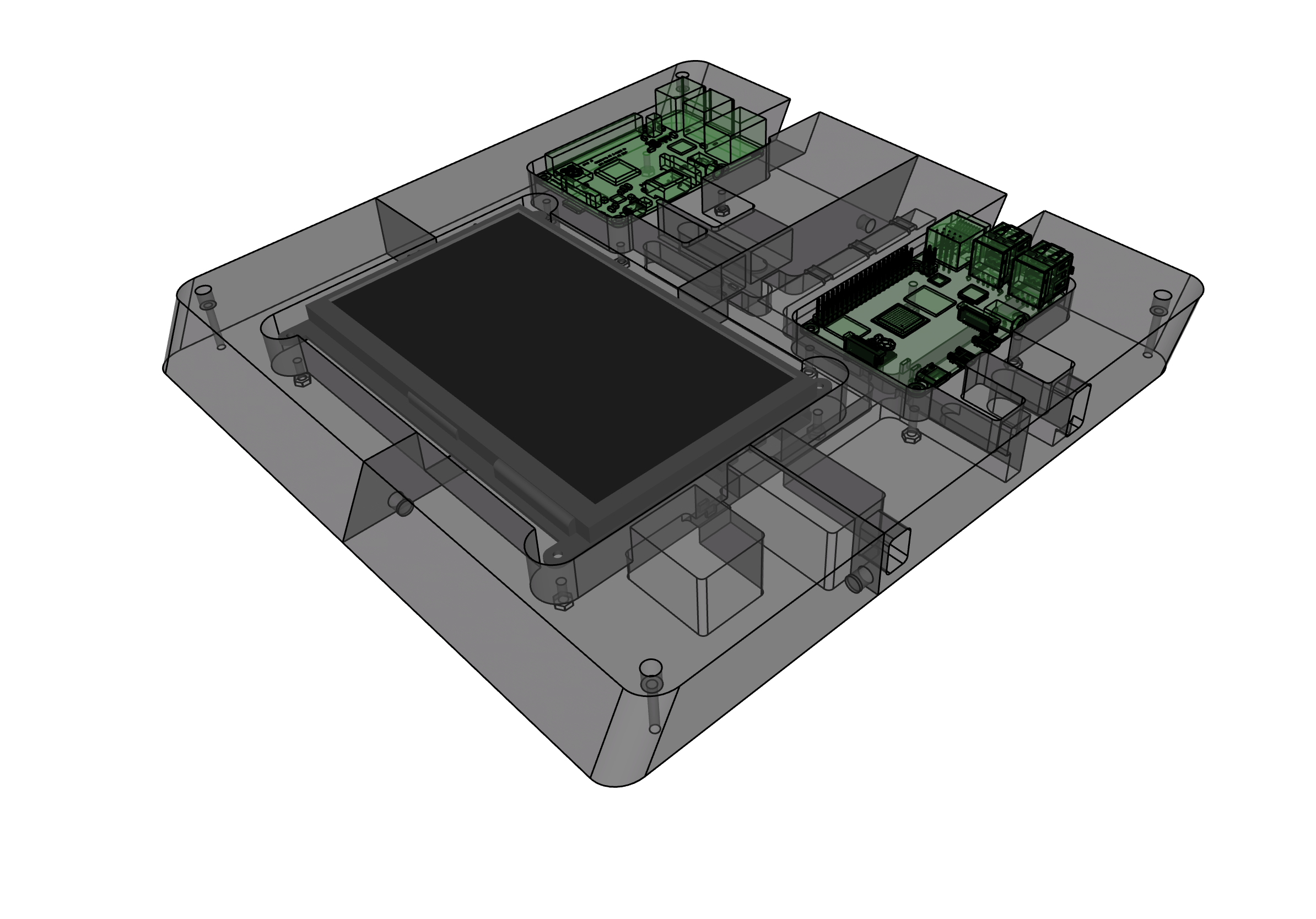

Inside Inside is an interactive experience for one player and multiple spectators. The installation can be set up in a seated environment or placed on a wall, depending on the exhibition space. There are two screens: one attached to a Playstation, and the other attached to a neural network that generates a film based on the output of the Playstation. A modified game controller is attached to the Playstation.

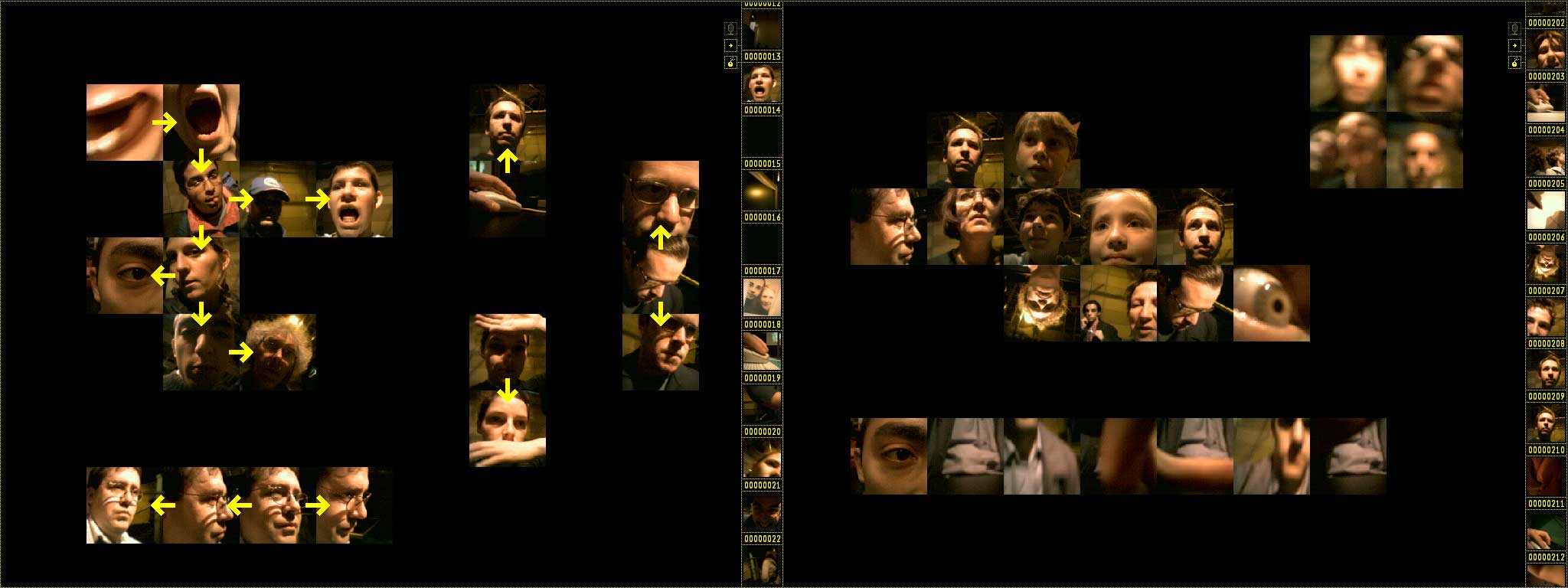

As the player progresses level by level through the game, the system analyses the game environment, as well as other factors, and recognizes certain features that it has “learned” to recognize via the trained neural network. It then associates these images with images on the second screen, taken from a database of curated sequences from the history of cinema. As a result, players can essentially re-sequence these images by lingering, advancing, retreating, or skipping through the game via its built-in “chapter” selection: the timing, order and duration depend entirely on the nature of the player’s gameplay. While players move through the space of the game, they are simultaneously editing a film.
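The idea that gameplay "edits" the film can be illustrated with a minimal sketch. It assumes, purely for illustration, that the system yields one matched clip index per analysed gameplay frame; the names `Cut` and `buildCutList` are hypothetical and do not come from the installation's codebase.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One cut in the generated film: which clip plays, and for how long.
struct Cut {
    int clip;            // index of the film sequence (hypothetical numbering)
    std::size_t start;   // first gameplay frame on which the clip was matched
    std::size_t length;  // number of consecutive frames it stayed matched
};

// Collapse a per-frame list of matched clip indices into a list of cuts.
std::vector<Cut> buildCutList(const std::vector<int>& matchedClipPerFrame) {
    std::vector<Cut> cuts;
    for (std::size_t frame = 0; frame < matchedClipPerFrame.size(); ++frame) {
        const int clip = matchedClipPerFrame[frame];
        assert(clip >= 0);  // assumption: clip indices are non-negative
        if (!cuts.empty() && cuts.back().clip == clip) {
            ++cuts.back().length;              // player lingers: the shot runs longer
        } else {
            cuts.push_back(Cut{clip, frame, 1});  // match changed: cut to another clip
        }
    }
    return cuts;
}
```

In this sketch, a player who stays in one area extends the current shot, while a player who moves on or returns to an earlier chapter triggers a new cut, so the order and duration of shots follow directly from the play session.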

Exhibition

There are two possible configurations of Inside Inside: a seated installation or with the monitors placed on a wall.

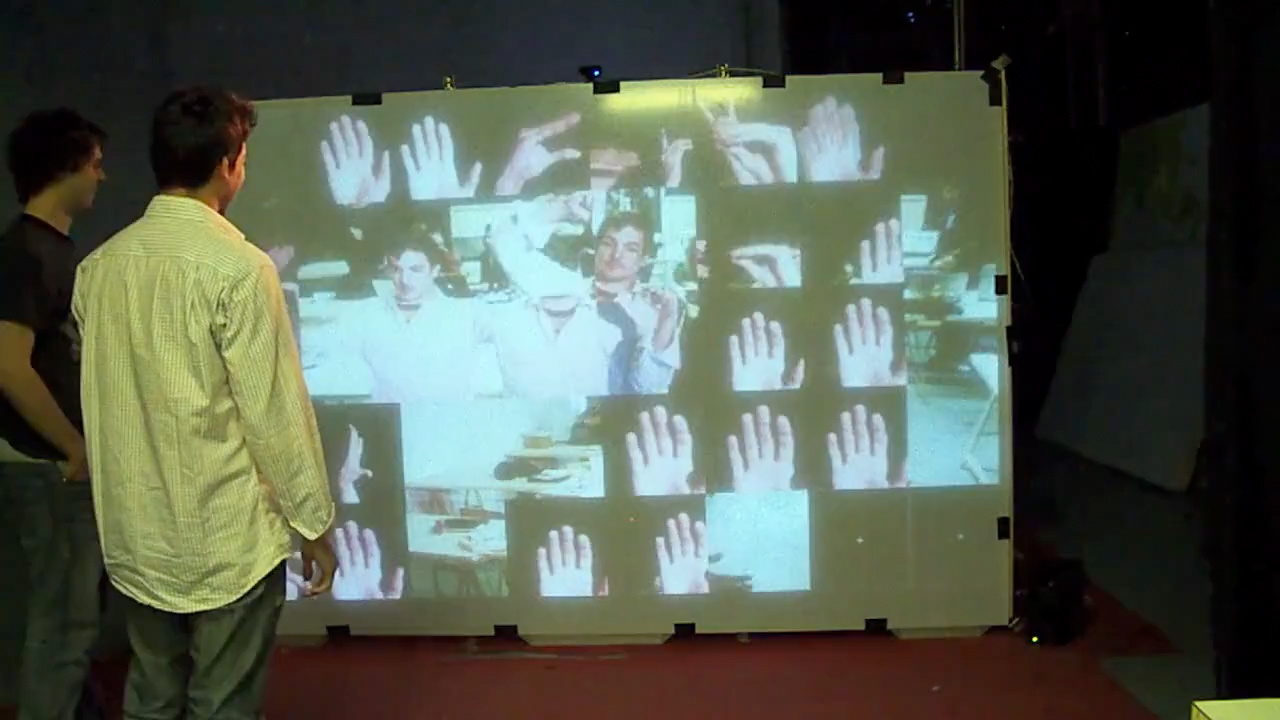

Inside Inside (wall configuration) at « Le champ du signe », May 2021

Technology

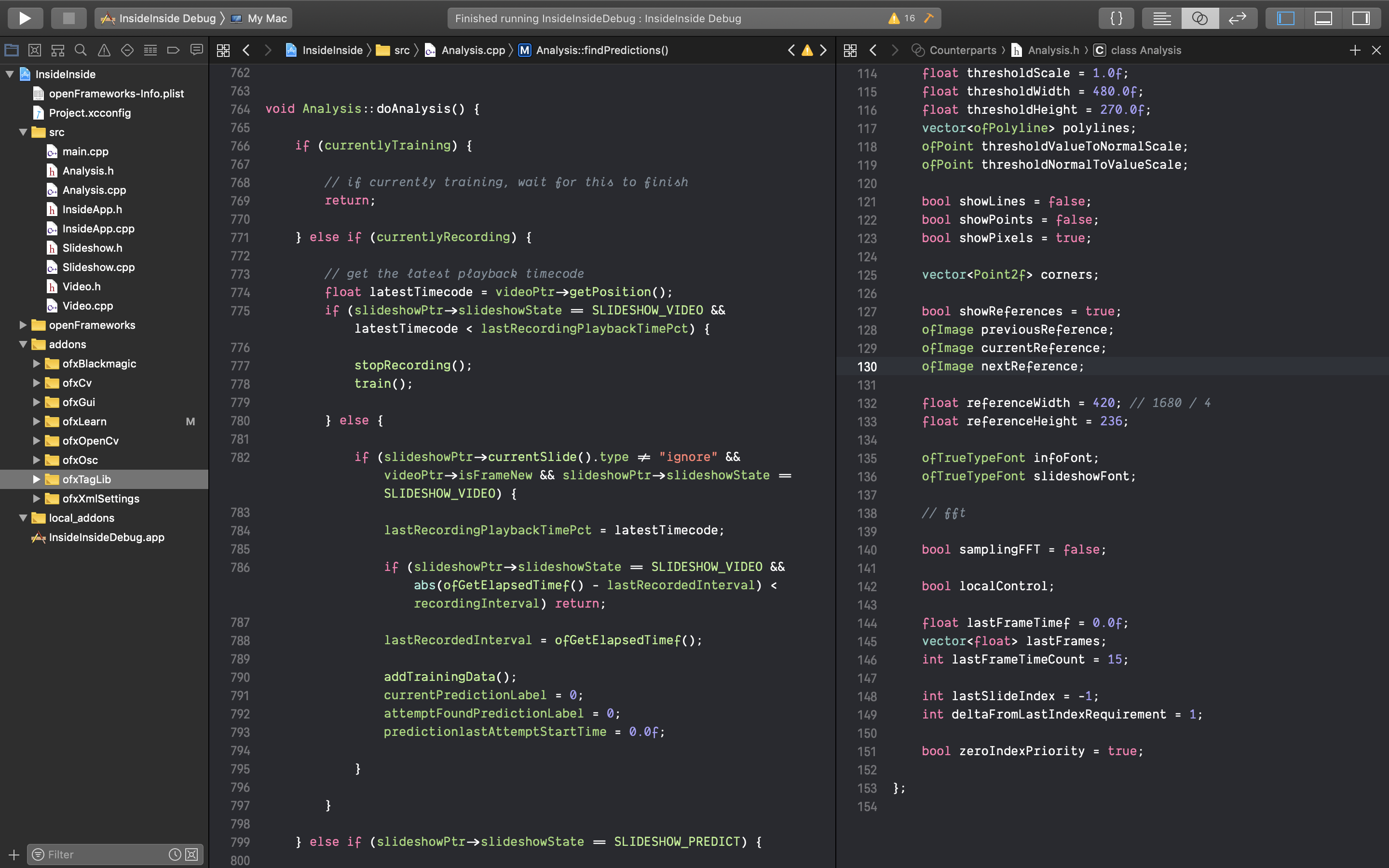

At the heart of this installation lies a neural network, designed to take video game imagery as its real-time input and to output images from a database of images. The full list of images in this database is given below.
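As a rough sketch of the final step, suppose the network produces one score per database image for each gameplay frame. The code below picks the highest-scoring image, and only switches away from the image currently on screen when the new candidate wins by a margin. The score-per-image output, the margin, and the names `bestMatch` and `chooseImage` are all assumptions made for illustration; the source does not describe how the installation actually selects its output.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <vector>

// Return the index of the database image with the highest network score.
std::size_t bestMatch(const std::vector<float>& scores) {
    assert(!scores.empty());
    return static_cast<std::size_t>(std::distance(
        scores.begin(), std::max_element(scores.begin(), scores.end())));
}

// Keep showing the current image unless another one scores higher by more
// than `margin` (a hypothetical parameter that damps frame-to-frame flicker).
std::size_t chooseImage(const std::vector<float>& scores,
                        std::size_t current,
                        float margin) {
    assert(current < scores.size());
    const std::size_t best = bestMatch(scores);
    return (scores[best] - scores[current] > margin) ? best : current;
}
```

Calling `chooseImage` once per analysed frame, and feeding its result back in as `current` for the next frame, gives a stable stream of image indices to hand to a playback engine.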

This machine learning apparatus is not based on large, GAFAM-controlled neural network training sets. It is, instead, designed entirely from the ground up, using standard C++ and APIs from the open-source OpenCV library, which are used to break down the images into their component (machine-analysable) parts. The system also uses OpenCV’s most recent implementations to “train” during the machine “learning” phase, where it creates the mechanically-determined image-to-image relationships. The system uses both contemporary, cutting-edge neural network and deep learning methods (e.g. convolution kernels) and image filtering techniques, some dating as far back as the earliest algorithms of computer signal processing.
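To make the term "convolution kernel" concrete, here is a minimal sketch in standard C++ that slides a 3x3 kernel over a grayscale image. It is not the installation's code: the `Gray` type and `applyKernel3x3` function are hypothetical, and in an OpenCV program this kind of filtering would normally be delegated to a library routine such as `cv::filter2D`. The source does not say which OpenCV functions the system actually uses.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A grayscale image stored row by row (hypothetical helper type).
struct Gray {
    std::size_t width;
    std::size_t height;
    std::vector<float> pixels;  // width * height values

    float at(std::size_t x, std::size_t y) const { return pixels[y * width + x]; }
};

// Slide a 3x3 kernel over the image without flipping it, as is usual for
// neural-network "convolutions". Border pixels are left at zero.
Gray applyKernel3x3(const Gray& src, const std::vector<float>& kernel) {
    assert(kernel.size() == 9);
    assert(src.pixels.size() == src.width * src.height);
    Gray dst{src.width, src.height, std::vector<float>(src.pixels.size(), 0.0f)};
    for (std::size_t y = 1; y + 1 < src.height; ++y) {
        for (std::size_t x = 1; x + 1 < src.width; ++x) {
            float sum = 0.0f;
            for (std::size_t ky = 0; ky < 3; ++ky) {
                for (std::size_t kx = 0; kx < 3; ++kx) {
                    // Weight each neighbour of (x, y) by the matching kernel cell.
                    sum += kernel[ky * 3 + kx] * src.at(x + kx - 1, y + ky - 1);
                }
            }
            dst.pixels[y * src.width + x] = sum;
        }
    }
    return dst;
}
```

With a hand-written kernel such as the horizontal Sobel operator `{-1, 0, 1, -2, 0, 2, -1, 0, 1}`, this is a classic signal-processing edge detector; when the nine weights are instead adjusted during training, the same loop becomes one filter of a convolutional layer.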

The advantage of this approach is that while the datasets can be very large, taking days or even weeks to train, they are not on the nation-state energy consumption scale of the publicly-contributed/privately-owned datasets of Google, Facebook, or Apple. This is very much bespoke A.I., designed, developed, and trained solely on this specific set of film clips and this specific game. Not only does smaller, bespoke A.I. consume less energy, it can also be built into fully dedicated smaller, cheaper, open-source hardware designs. As a result, the analysis and playback engine was built entirely using open-source “embedded” solutions, such as the Raspberry Pi and other Linux-based open-source solutions.

Various components of this system have already been shared via the code-sharing resource GitHub, although more work is still required before making the entire codebase public.

Cf. https://github.com/abstractmachine

As previously indicated, this original system was designed around the game Inside by Playdead as a small, albeit complex, dataset, paired with a small dataset of 128 film sequences. Inside was also chosen because of its paranoiac exploration of A.I.-adjacent themes such as control systems (cf. below). This system, which has now been fine-tuned over thousands of training sessions, was only a first test case, focused mostly on the prototyping and production of the physical hardware as well as the development of the neural network software platform. Now that the system works and has been successfully tested in an exhibition context, other game/cinema pairings are in their development and testing phases. The ultimate goal is to further develop this curious hybrid, situated somewhere in between two mediums, but also somewhere in between media analysis, cultural remix, and critical gameplay.

These game/cinema pairings vary widely in complexity and scope, and may not all pan out. Some are based on synchronizing a single film, such as Švankmajer’s Alice (1988), with a single game, such as Miyamoto’s Super Mario Bros. (1985). Another single-sync pairing, also currently in development, matches the movement in id Software’s 3D first-person classic Doom (1993) with the tracking shots of Gus Van Sant’s Elephant (2003). A far more ambitious pairing, also in development, combines real-time analysis of Rockstar’s massive open-world Red Dead Redemption franchise (2010 and 2019) and uses it to “search” for images in an equally massive collection of sequences from over fifty classic westerns such as The Naked Spur (1953), The Searchers (1956), Forty Guns (1957), For a Few Dollars More (1965), The Shooting (1966), Django (1966), The Wild Bunch (1969), High Plains Drifter (1973), Unforgiven (1992), to name just a few.

Algorithmic Cinema

Mur Communicant, collaborative media research project, Seconde nature, 2010

Cf. https://vimeo.com/17174658

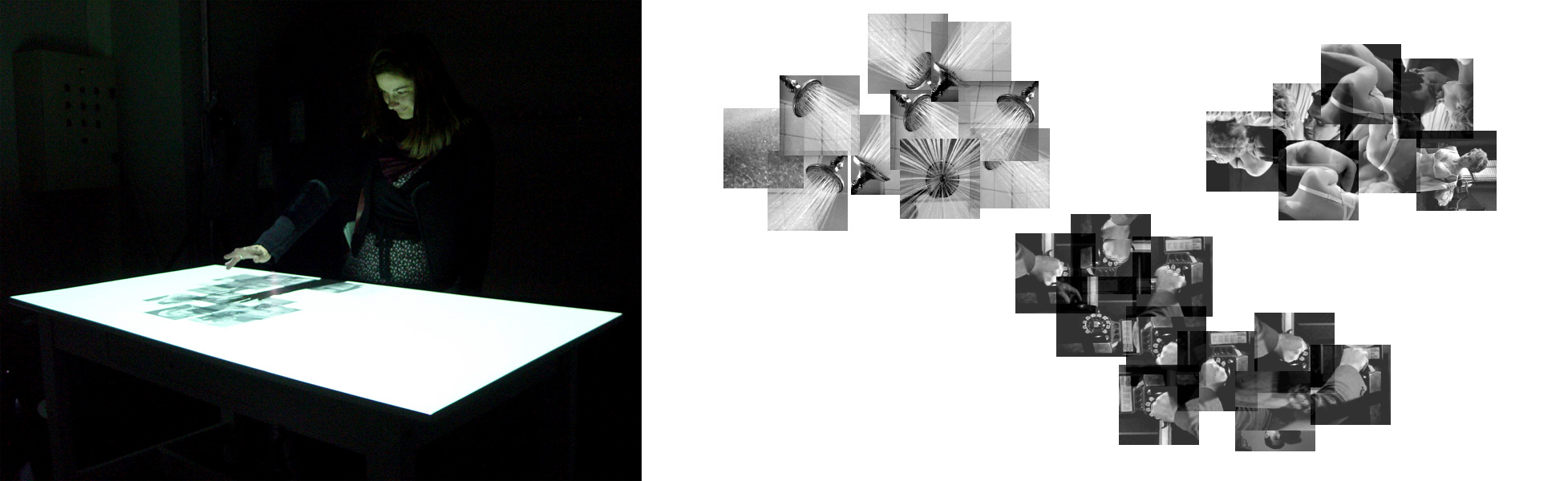

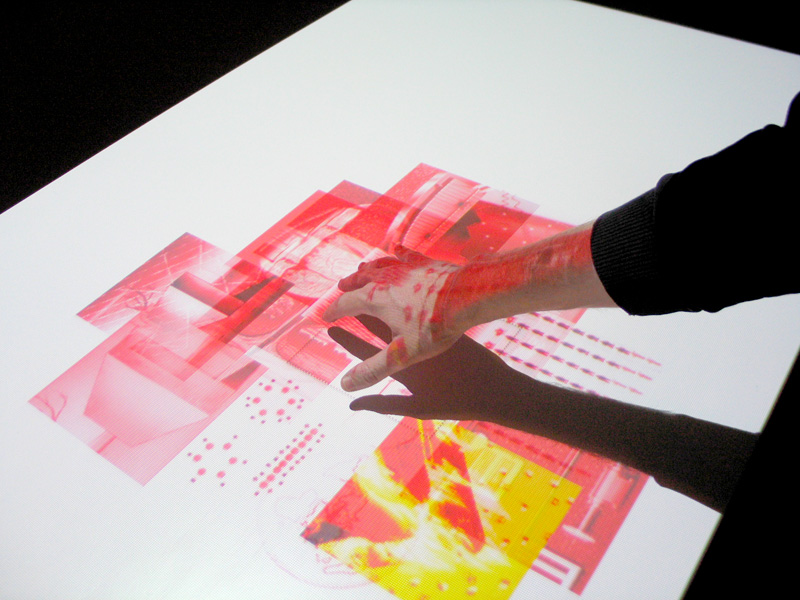

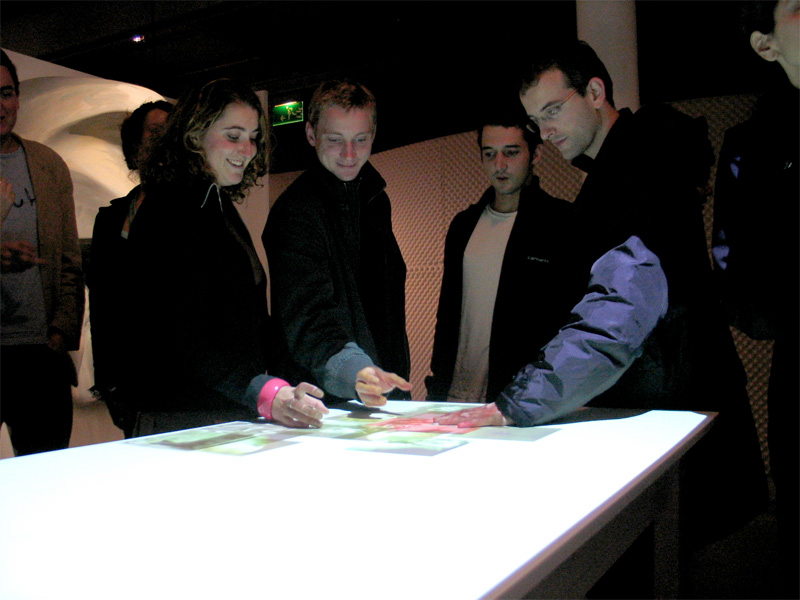

This project joins a branch of my work in which, over the past twenty years, I have been exploring various forms of a nascent “algorithmic cinema” through art installations, experiments, research, and development platforms. A lot of this work has involved inventing new forms of interacting with and remixing narrative cinema, for example through the development of touch surfaces that would allow for the direct manipulation of moving image databases, a full five years before touch-based systems such as Apple’s iPhone or Microsoft’s PixelSense. Another sub-branch of this research involved looking at how visual and conceptual non-linear interfaces could reimagine the traditional sequence timeline of cinema.

Object Machine, Villette Numérique, 2002

In 2004, I attempted to theorize this approach with the following text on algorithmic cinema:

We will use the expression “algorithmic cinema” not to describe the technical means by which images are created on computers (special effects, non-linear editing, etc), but instead to describe the transformations brought about by the algorithmisation of cinema in its most intimate aspects. “Algorithmic”, because code and programs are now controlling all aspects of playback: starting with the compression of the image, its distribution over networks, and its decompression in the spectator’s viewport. “Algorithmic”, because by introducing interactivity into the image, code can now affect the image itself, its means of unravelling, and grow inside of the original image another image, another form, another narration. “Algorithmic”, because search engines have now themselves begun seizing moving images, opening up the inevitable development of combinatory, and even generative, cinema. And finally, “algorithmic”, because algorithms both encompass and expand far beyond these new ingredients (programs, interactivity, generativity) and introduce into moving images new threads of reassemblage that do not emerge from the logic of image production. The scope of this transformation is so fundamental that in some near future, yet to be determined, these algorithmically transformed images will not be limited to what today we would consider a “computer”. (translated from French)

While this text, soon two decades old, makes many prescient observations, it is also somewhat over-ambitious in its claims about the future. In 2020, the peak of mainstream interactive cinematic experiences could be summed up by Netflix’s Bandersnatch, and little else. Despite interesting inroads coming from the world of 360° VR cinematography, the true shift towards a more combinatory, interactive and generative narrative, one that integrates more traditional cinematic narrative forms into algorithmic systems, is clearly emerging from the world of video games, with massive new inroads from the latest real-time rendering engines.

Concrescence, Festival Némo, Paris, 2004

The Signal, Centre Pompidou, 2006

Festival Mapping, Bâtiment d’art contemporain, Genève, 2011

Control Systems

Since their very beginnings, video games have established a kinaesthetic relationship between the body and the image, essentially inventing a new kind of audiovisual perception nervous system. Throughout the history of cinema, especially in the science fiction works of Michael Crichton (Westworld, Looker), as well as in the fever dreams of David Cronenberg (Videodrome, eXistenZ) and Shinya Tsukamoto (A Snake of June, Tetsuo), cinema too has sought to understand its own particular form of kinaesthetic influence on human perceivers, by exploring the technological, mythological and biological limits of this perceptual-emotional relationship. Long live the new flesh.

A common trope weaves in and out of these two mediums and their own filtering of this psycho-biological fascination: the image of control. In video games, the official mot d’ordre is interactivity, often poorly theorised as a form of control players have over the game. But this remote control system reveals another internal logic: that the machine, in turn, also controls its user. Players may start the game, but it is the game itself that decides when it is over. In the traditional cinematic apparatus, the physical passivity of the spectator is illusory; while in video games, the control the player wields over the image-world is only a subset of the overall functioning of the machine. Given the inverted nature of this illusion, both have developed their own narrative strategies for confronting it: mostly involving paranoiac stories of lonely individuals, caught up in the metaphorical and/or literal gears of some alien force, monstrous horde, invisible conspiracy, or impossible machine.

The installation Inside Inside juxtaposes these two mediums — cinema and video games — and attempts to reveal this imagined persecution, and how each medium dreams in its own way of the prosthetic powers of the image.

Referenced Works

- Game: Inside, Playdead

- Films & Documents: 2001: A Space Odyssey (1968), A.I. Artificial Intelligence (2001), The Abyss (1989), Alien (1979), Altered States (1980), Artaud, Pour en finir avec le jugement de Dieu (1947), Atari Mindlink (1984), Baxter (1989), Blade Runner (1982), Brainstorm (1983), The Blob (1958), Brazil (1984), Caspar David Friedrich - Der Chasseur im Walde (1814) & Frühschnee (1827), Close Encounters of the Third Kind (1977), Coma (1978), Dark Star (1974), Dark Water (2002), Dawn of the Dead (1979), ET (1982), Frankenstein (1931), Françoise Bonardel, L’anti-oedipe ou le corps sans organes (2014), Futureworld (1976), Gorillaz, Clint Eastwood (2001), Hard Hat Mack (1983), Invasion of the Body Snatchers (1956), Ivan’s Childhood (1962), Jaws (1975), Je t’aime, je t’aime (1968), The Life Aquatic with Steve Zissou (2004), The Matrix (1999), Metropolis (1927), Pikmin (2001), The Prisoner (1967), Ringu (1998), Scanners (1981), Sen to Chihiro no kamikakushi (2001), Kurt Vonnegut - The Shapes of Stories, Shivers (1975), Super Mario Bros (1985), They Live (1988), The Thing (1982), Thriller (1982), Titanic (1997), Towards a Cognitive Theory of Narrative Acts (2010), The Tripods (1984), Westworld (1973), White Dog (1982), Zabriskie Point (1970), eXistenZ (1999)