How can we reappropriate synthetic text-generation software and turn it into a powerful tool for change and activism?

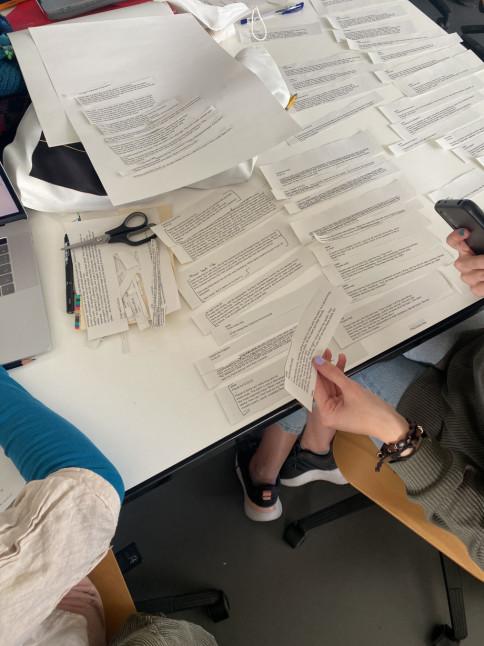

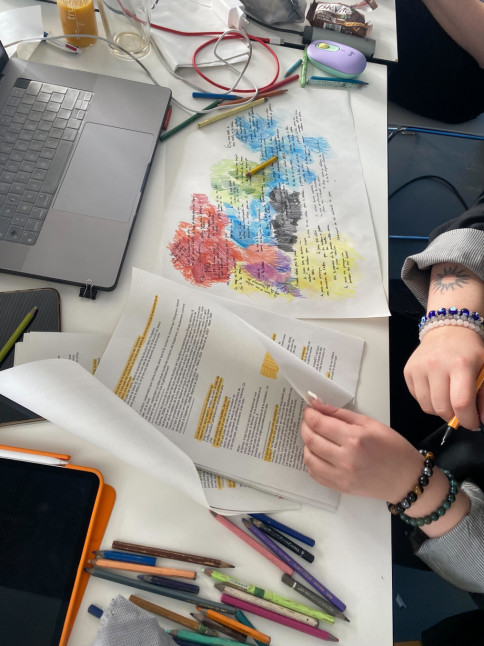

As the world loses its mind with the arrival of powerful AI mimicry chatbots, the Master Media Design department organised this one-week workshop to help participants take a step back and explore how such synthetic text generators work, how they are initially trained, and how they can be reinvested using new bespoke datasets. Moving beyond the fear and the hype of these new technologies, participants were asked to collectively reflect on and explore how these algorithms convey biases from their creators, biases located in the datasets these technologies have been trained on. Then, the workshop invited participants to freely explore through hands-on techniques (using large sheets of paper, pens, and scissors) how these technologies can be retrained and actively reappropriated to make textual outputs that are more representative of ourselves and our identities.

- Workshop: Within one body there are millions

- Department: Master Media Design, HEAD – Genève

- Students: Léonie Courbat, Marine Faroud-Boget, Flore Garcia, Mariia Gulkova, Narges Hamidi Madani, Amaury Hamon, Margot Herbelin, Elie Hofer, Dorian Jovanovic, Tomislav Levak, Louka Najjar, Camilo Palacio, Faust Périllaud, Michelle Ponti, Hanieh Rashid, Tibor Udvari, Nathan Zweifel, Huiwen Zang

- Teachers: Sabrina Calvo, Douglas Edric Stanley

- Project Link: within-one-body-there-are-millions

From the brief:

Deliverables

- Design a textual world

- Build your own .txt dataset for retraining a generative transformer

- Fine-tune interactions with this text generator

- This is a tool you are building for your own personal workflow

- Don’t worry about user-facing interactions

- You will use this tool during the Virtual Worlds lab with Joana Huguenin

What are we going to learn?

- Collecting and curating a .txt dataset that can then be used to retrain a text transformer

- Developing a critical & intersectional reading of questions of bias, datasets, and the commons, and of how they affect AI

- Fine-tuning your text into a poetic expression of a world

- Cleaning (or, on the contrary, dirtying) your dataset of extraneous elements, using classical natural language tools (find/replace, regex, NLTK, …)

- Setting up a Google Colab notebook

- Setting up and using models from huggingface.co

- Comparing different proprietary & open-source transformers: GPT-2, GPT-3, BLOOM, OPT, CodeGen, …
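As an illustration of the dataset-cleaning step listed above, here is a minimal sketch using only Python's standard `re` module. The function name `clean_dataset`, the sample text, and the particular choice of what counts as "extraneous" (URLs, bracketed footnote markers, page-number lines, stray whitespace) are assumptions made for this example and are not taken from the workshop materials.

```python
import re


def clean_dataset(raw: str) -> str:
    """Strip a few common extraneous elements from a plain-text corpus.

    Hypothetical helper: the rules below are illustrative, not the
    workshop's own cleaning recipe.
    """
    # Normalise Windows line endings.
    text = raw.replace("\r\n", "\n")
    # Remove URLs.
    text = re.sub(r"https?://\S+", "", text)
    # Remove bracketed footnote markers such as [12].
    text = re.sub(r"\[\d+\]", "", text)
    # Drop lines that contain nothing but a number (e.g. page numbers).
    text = re.sub(r"(?m)^[ \t]*\d+[ \t]*$\n?", "", text)
    # Collapse runs of spaces and tabs into a single space.
    text = re.sub(r"[ \t]+", " ", text)
    # Trim spaces at the start and end of every line.
    text = re.sub(r"(?m)^ +| +$", "", text)
    # Collapse three or more newlines into one paragraph break.
    text = re.sub(r"\n{3,}", "\n\n", text)
    return text.strip()


# Made-up sample text, for demonstration only.
sample = (
    "The temple rises[3]   above the river.\n\n\n\n"
    "42\n"
    "See https://example.org/page for more.\n"
)

if __name__ == "__main__":
    print(clean_dataset(sample))
```

"Dirtying" a dataset would work the same way in reverse: each `re.sub` call could insert or scramble material instead of removing it. Which rules to keep depends entirely on the textual world you are building.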

Presentation

Here is a link to the slides of the opening presentation: head-mmd1-onebodymillions-colab-keynote.pdf

References

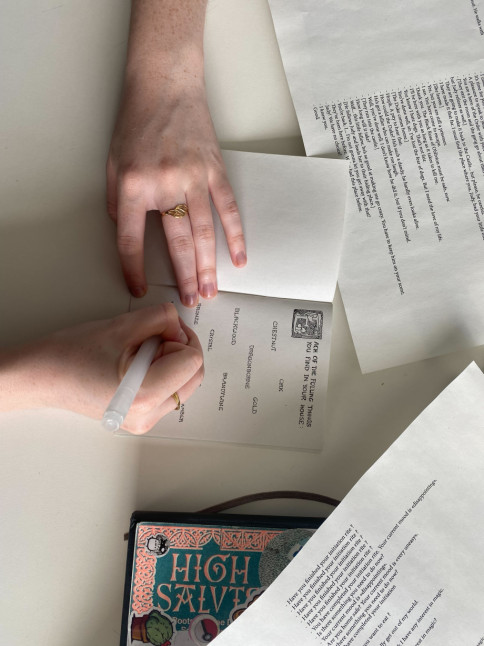

- I Ching, The Book of Changes

- Nothing Forever

- Electric Dreams

- Videodrome

- Consentacle: A Card Game of Human-Alien Intimacy

- Consentacle

- Fractal Time Wave

- Automatic Writing

- Tlön, Uqbar, Orbis Tertius

Resources/Tutorials

- GPT-2 Neural Network Poetry

- Colab + GPT-2 Retraining Checklist

- abstract machine-head-tutorial-gpt-2

Tools/Datasets

- Regular Expression Text Parsing Examples

- Kaggle

- Gutenberg

- Shakespeare.txt (tiny-shakespeare-dialogues.txt, cleaned)

- Shakespeare.txt (full Shakespeare, untreated)

- Archive.org

- Dreambank (A collection of over 20,000 dream reports)

- Huggingface

Here is an example of how one of these retrainings was used as instructed, i.e. as a self-designed prompt and constraint for the following workshop on 3D modelling. This piece was exhibited at our Hybrid Strategies exhibition in San Francisco.

From the exhibition text:

- Title: magnalumina

- Designer: Elie Hofer

- Date: 2023

- Medium: Large language model, txt, 3D modelling

- AI: GPT-NeoX, Hugging Face Transformers

- Workshop: Queering AI

- Instructors: Sabrina Calvo & Douglas Edric Stanley

- Department: Media Design

While the world lost its mind with the arrival of powerful AI mimicry chatbots, the Media Design Master program organized this one-week workshop to help participants take a step back, explore how such synthetic text generators work, how they are initially trained, and how they can be reinvested using new bespoke datasets. Moving beyond the fear and the hype of these new technologies, participants were asked to collectively reflect on and explore how these algorithms convey biases from their creators, biases that are located in the datasets these technologies have been trained on. By “queering” the baseline trainings, toxic words and their over-coded historical biases could then be released with care into new, more fluid semantic territories where binary suffering opens onto multi-chromatic poetic landscapes.

These retrainings were then used as bespoke, small-scale textual models that each student could use as a guide for building a virtual world with classical 3D modelling tools. For his project Inner Composition, Elie Hofer trained his own interfaith chatbot using a huge repository of historical descriptions of architectural structures from multiple religions. As Elie designed his 3D virtual world, he consulted his personalized textbot for guidance on what to model next, creating a collaborative process in which the chatbot assisted in the worldbuilding.