- Workshop: playVision

- Organizers: Douglas Edric Stanley & Stéphane Cousot

- Location: Atelier Hypermedia, Aix-en-Provence School of Art

- Dates: January 8 - 18, 2008

- Partners: Transatlab, School of the Art Institute of Chicago, M2FCréations, Hacking Lab, [Atelier 3D](http://www.ecole-art-aix.fr/-Atelier-Son-), Locus Sonus

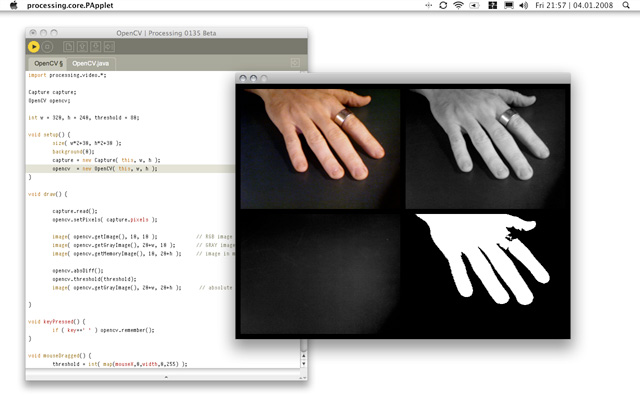

I’m a little giddy this evening, because Stéphane Cousot and I finally got a first, very alpha version of the OpenCV library for Processing working. We’re building this library for a workshop that begins next week and will culminate in a game to be installed at the Gamerz 0.2 exhibit on January 15th in Aix-en-Provence. After that, we’ll join the other groups working within the Transatlab workshops for the rest of January, and see if we can’t put our OpenCV library to good use in their projects.

As for the library itself, talking back and forth between Processing and OpenCV turned out to be, as expected, quite a pain. Basically, we have to write bridge code for every OpenCV function we want to use, and implement on the JNI side of the library all the code required for manipulating and storing the image-analysis data. Yikes. In fact, just getting that Java-C++ bridge right, without memory leaks or other hazards, took up about 90% of the couple of days we've put into it so far. I’m also a bit obsessed with keeping the code as fast as possible (i.e., passing as little data as possible between the two environments), since native processor speed is one of the reasons you'd want to use a library like this with Processing.
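To make the "as little data as possible" idea a bit more concrete, here is a minimal Java sketch, assuming a native side that packs every blob it finds into a single flat `int[]` laid out as `[x, y, width, height, ...]`. The names `BlobUnpacker`, `Blob` and `findBlobsPacked` are hypothetical and are not part of our library or of OpenCV; the point is only the shape of the data crossing the bridge.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch: the native (C++) side is assumed to return all blobs
// found in a frame as one flat int[] laid out as
// [x, y, width, height, x, y, width, height, ...].
// A matching JNI declaration might look like this (hypothetical name):
//   private native int[] findBlobsPacked(int threshold);
// One native call per frame then replaces one call per blob or per field.
final class BlobUnpacker {
    // Number of ints stored per blob in the packed array.
    static final int FIELDS_PER_BLOB = 4;

    // Simple value object holding one blob's bounding box.
    static final class Blob {
        final int x, y, width, height;

        Blob(int x, int y, int width, int height) {
            this.x = x;
            this.y = y;
            this.width = width;
            this.height = height;
        }

        int area() {
            return width * height;
        }
    }

    private BlobUnpacker() {}

    // Converts the flat array into Blob objects entirely on the Java side,
    // so no further trips across the bridge are needed for this frame.
    static List<Blob> unpack(int[] packed) {
        if (packed == null || packed.length == 0) {
            return Collections.emptyList();
        }
        if (packed.length % FIELDS_PER_BLOB != 0) {
            throw new IllegalArgumentException(
                "packed length must be a multiple of " + FIELDS_PER_BLOB);
        }
        List<Blob> blobs = new ArrayList<>(packed.length / FIELDS_PER_BLOB);
        for (int i = 0; i < packed.length; i += FIELDS_PER_BLOB) {
            blobs.add(new Blob(packed[i], packed[i + 1], packed[i + 2], packed[i + 3]));
        }
        return blobs;
    }
}
```

The design choice being illustrated is batching: one array copy per frame instead of a native getter call for every blob and every field, since each crossing of the Java/native boundary has its own overhead.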

The original plan was to base our work on the fine code created by those wonderful people over at OpenFrameworks, and just add a JNI wrapper for Processing. Yeah, right. It turns out you can't just shoehorn these things together like that, so we had to go back to Intel's OpenCV source code and work from there. All was not lost, though: we've been able to use OpenFrameworks as a cheat sheet and, more importantly, to match our methods to theirs. We couldn't make it 100% compatible, so you won't be able to just copy your Processing code over. But we're at least trying to keep the two projects as close as possible, so that people can port the essential routines from Processing over to OpenFrameworks without too much hassle. So far we’ve found it pretty easy to go in that direction (it's a fabulous way to learn C++, by the way), so why not return the favor and, for a change, draw on OpenFrameworks to generate some code for Processing?

For those who might be interested in this library: we’re working on it, but we don’t have a specific timeframe for releasing it. As Stéphane and I did with the UDP library, we’ll fine-tune it until it works within the context of the atelier, and then Stéphane will probably clean it up and make it all spiffy, as he usually does.

That said, if you want any specific functions from the OpenCV library moved to the top of the list (i.e., while we still have time to work on this project), let me know -- perhaps in the comments here? As I mentioned above, we're beginning with a simple demo-to-demo match of the OpenFrameworks example, and then we may roll in some of the face detection behavior currently offered by Jaegon Lee's Face Detection library. That's a big maybe, because that library already works. But since it's merely a wrapper for a specific Mac OS X port of OpenCV, it would probably be good to go back to the original OpenCV framework for Mac+Win+Linux compatibility. Anyway, edge/blob detection and face tracking are the first two items on our list, along with all the fun little extra gadgets that come with making those two work. Any others?